HuggingGPT

The field of artificial intelligence (AI) has seen tremendous progress in recent years, with large language models (LLMs) like GPT-3 showcasing impressive abilities in understanding and generating human-like text. However, these models mainly handle text, so on their own they struggle with tasks that involve other modalities such as images, audio, or video.

This is where HuggingGPT comes into play, introducing an innovative approach that connects LLMs with the extensive ecosystem of models available on the Hugging Face platform.

What is HuggingGPT?

HuggingGPT operates on a straightforward yet powerful principle: using a large language model as a central controller to assign tasks to specialized models within the Hugging Face ecosystem. Think of it as a project manager, efficiently delegating tasks to the most skilled team members based on their specific expertise.

| Attribute | Details |
|---|---|
| AI Tool | HuggingGPT |
| Category | Multimodal AI System |
| Feature | LLM with specialized models for complex tasks |
| Accessibility | https://huggingface.co/spaces/microsoft/HuggingGPT |
| Launch Date | April 2023 |
| Team | Yongliang Shen, Kaitao Song, Xu Tan, Dongsheng Li, Weiming Lu, Yueting Zhuang |
| Cost | Free, with open-source components |

How HuggingGPT Works

1. User Input:

The user provides a request or instruction, which could range from summarizing text to generating code or creating images.

2. LLM Analysis:

The central LLM, such as GPT-3.5, analyzes the user’s request to understand the intended task and required capabilities.

3. Model Selection:

Based on its analysis, the LLM identifies the most suitable model or combination of models from the Hugging Face platform to execute the task effectively.

4. Task Execution:

The selected model(s) perform the assigned task, leveraging their specific training and expertise.

5. Output Generation:

The results from the chosen models are then compiled and delivered back to the user in a comprehensive and understandable format.
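
The five steps above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not HuggingGPT's actual code: the "experts" are plain functions rather than hosted models, keyword rules stand in for the controlling LLM, and every name here (`EXPERTS`, `analyze_request`, `handle`) is hypothetical.

```python
from typing import Callable, Dict, List


# Hypothetical stand-ins for expert models; a real system would call hosted models.
def summarize(text: str) -> str:
    """Toy 'summarizer': keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def count_words(text: str) -> str:
    """Toy 'analysis model': report the number of whitespace-separated words."""
    return f"{len(text.split())} words"


# Registry mapping a task name to the expert that handles it (assumed names).
EXPERTS: Dict[str, Callable[[str], str]] = {
    "summarization": summarize,
    "word-count": count_words,
}


def analyze_request(request: str) -> List[str]:
    """Step 2 stand-in: keyword rules in place of the controlling LLM."""
    lowered = request.lower()
    tasks = []
    if "summar" in lowered:
        tasks.append("summarization")
    if "count" in lowered:
        tasks.append("word-count")
    return tasks


def handle(request: str, payload: str) -> str:
    tasks = analyze_request(request)                       # step 2: LLM analysis
    selected = [(task, EXPERTS[task]) for task in tasks]   # step 3: model selection
    results = {task: expert(payload) for task, expert in selected}  # step 4: execution
    return "; ".join(f"{task}: {out}" for task, out in results.items())  # step 5: output


print(handle("Summarize this and count the words",
             "HuggingGPT routes tasks. It uses expert models."))
# prints: summarization: HuggingGPT routes tasks.; word-count: 7 words
```

The point of the sketch is the shape of the flow: the controller never does the work itself, it only decides which expert runs and then stitches the outputs together.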

Advantages of HuggingGPT

HuggingGPT offers several advantages over traditional LLM-based systems:

Versatility and Adaptability:

By using the diverse range of models available on Hugging Face, HuggingGPT can manage a broad spectrum of tasks without needing individual fine-tuning for each one. This adaptability makes it an invaluable tool for various applications across different domains.

Improved Efficiency and Resource Management:

Instead of training a single LLM to handle every task, HuggingGPT efficiently delegates tasks to specialized models, optimizing resource utilization and reducing computational costs.

Community-Driven Development:

Hugging Face hosts openly shared models, datasets, and libraries and fosters collaboration and knowledge sharing within the AI community. As contributors publish new models there, the pool of experts that HuggingGPT can draw on keeps growing.

Reduced Bias and Increased Fairness:

Drawing on diverse models trained on different datasets may help mitigate the biases of any single model, although it does not remove them.

HuggingGPT

HuggingGPT is an AI framework that integrates large language models with specialized models from the Hugging Face platform to solve complex, multimodal tasks across various domains.

Potential Applications of HuggingGPT

The versatility of HuggingGPT opens doors to numerous applications across various sectors:

Education: Automating tasks such as grading essays, providing personalized feedback, and generating engaging learning materials.

Research: Streamlining literature reviews, summarizing research papers, and assisting with data analysis.

Content Creation: Generating creative content such as poems, scripts, musical compositions, and even realistic images.

Customer Service: Developing intelligent chatbots capable of understanding complex requests and providing accurate information or solutions.

Software Development: Assisting with code generation, debugging, and documentation.

Accessibility: Creating tools for people with disabilities, such as real-time sign language translation or text-to-speech applications.

Challenges and Future Development

Despite its potential, HuggingGPT also faces some challenges:

- LLM Limitations: The effectiveness of HuggingGPT largely depends on the controlling LLM’s ability to accurately interpret user intent and select the appropriate models.

- Technical Complexity: Integrating various models and ensuring seamless communication between them requires a robust technical infrastructure and significant expertise.

- Ethical Considerations: As with any AI system, it is essential to address potential biases and ensure the responsible use of HuggingGPT to prevent unintended consequences.

Further development of HuggingGPT could involve:

Enhanced LLM Capabilities: Improving the controlling LLM’s ability to better understand context, nuances, and user intent.

Expanding the Model Ecosystem: Continuously adding new models to the Hugging Face platform to broaden HuggingGPT’s capabilities.

Developing Explainability and Interpretability: Making it easier for users to understand how HuggingGPT arrives at its results, fostering trust and transparency.

FAQs about HuggingGPT:

1. Is HuggingGPT open-source?

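Largely, yes. The HuggingGPT code is publicly available in Microsoft’s JARVIS repository on GitHub, and the expert models come from the Hugging Face community. The controlling LLM, however, can be a proprietary model such as ChatGPT.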

2. What types of tasks can HuggingGPT handle?

HuggingGPT can manage a wide array of tasks, including text summarization, question answering, code generation, image captioning, translation, and more. Its capabilities are continually expanding as new models are added to the Hugging Face platform.

3. How does HuggingGPT work?

HuggingGPT uses an LLM as a central controller to break down user requests into smaller tasks, select appropriate models from the Hugging Face ecosystem, execute the tasks, and then combine the results to generate a comprehensive response.

4. What are the advantages of using HuggingGPT?

HuggingGPT offers the flexibility to handle complex and multimodal AI tasks by combining the strengths of various expert models.

5. Are there any limitations to HuggingGPT?

Some limitations include potential inefficiencies due to multiple interactions with the LLM, context length restrictions, and the reliability of the overall system.

Introduction

HuggingGPT is not just another large language model; it’s a system built around one. Despite the name, it doesn’t come from the company Hugging Face itself: the paper is from researchers at Zhejiang University and Microsoft Research Asia, and the system builds on the models hosted on Hugging Face. If you’re not familiar with Hugging Face, it’s a website that hosts a large array of large language models, machine learning models, and a whole bunch of libraries and tools to help you build artificial intelligence. It’s a great community to be a part of if you want to stay on the cutting edge of artificial intelligence.

But today, we’re going to be talking about the recently announced HuggingGPT and why I’m so excited about it.

HuggingGPT

The reason I’m so excited about HuggingGPT is that it actually promises to bring all of these disparate large language models and machine learning models together in one giant toolset controlled by AI.

They are claiming that it’s actually one step closer to AGI (Artificial General Intelligence). The way to think about it is HuggingGPT is kind of like this central brain, and it has an enormous toolset of large language models and machine learning models at its disposal to use for different tasks from the user.

For example, if I’m the user and I say, “Hey, summarize this PDF,” it needs to extract the text from the PDF, and possibly the images too, before it can summarize anything. That could be a multi-step process to return the results the user is expecting. So, let’s talk a little bit about the paper and why it’s so important.

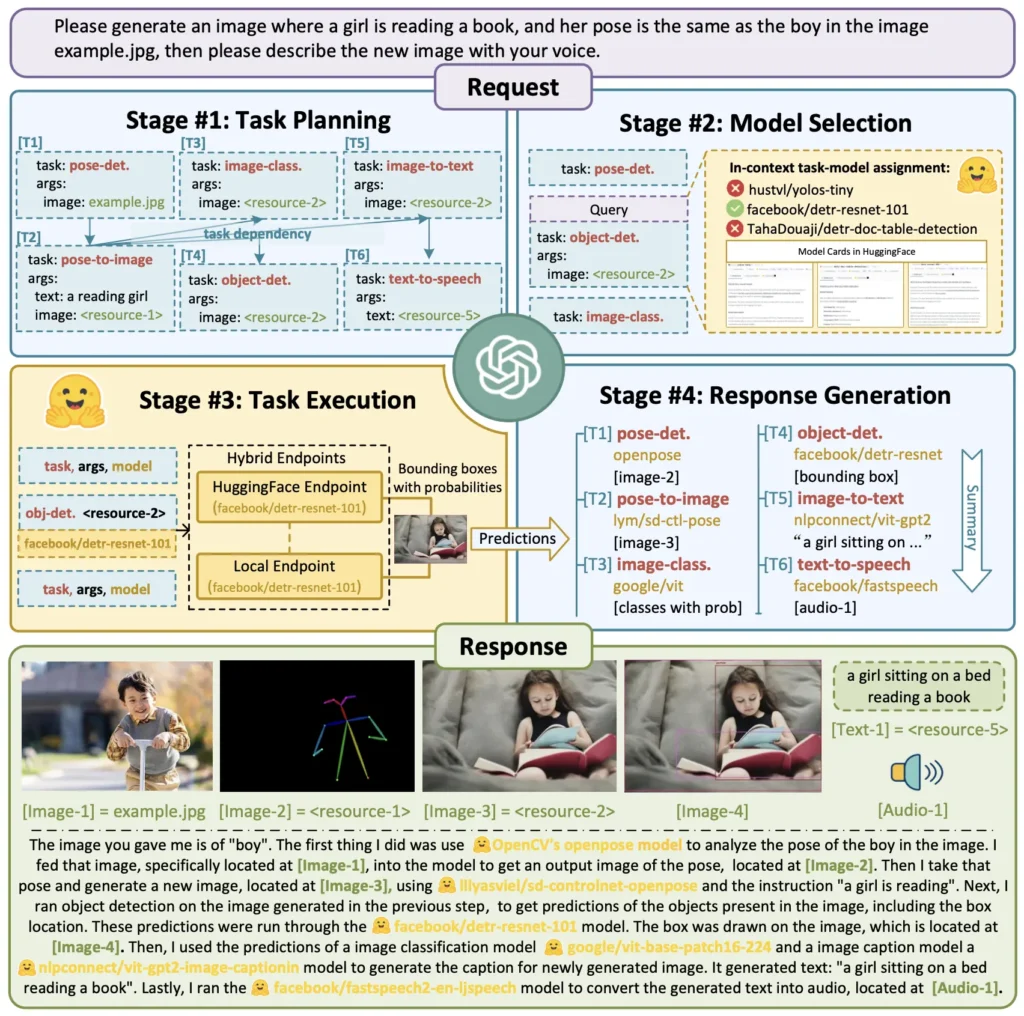

Here’s the paper, and in the abstract, the first line says it all: solving complicated AI tasks with different domains and modalities is a key step toward Artificial General Intelligence. What they’re saying is that large language models today are primarily for text, which is great, but we’re going to need video, audio, and all these different formats brought into one place.

Typically, for a single large language model to do that, it’s really difficult—they tend to get distracted, and it’s very challenging to train. What they’re proposing is they have a central brain or almost a dispatch where they can tap all of these other large language models and artificial intelligence tools to help with the user’s prompt, even if it’s extremely complicated, multi-step, and multimodal.

The paper also mentions that large language models (LLMs) could act as a controller to manage existing AI models to solve complicated AI tasks. It’s really cool to think about—one model controlling many other models, and that can be extremely powerful.

How It Works

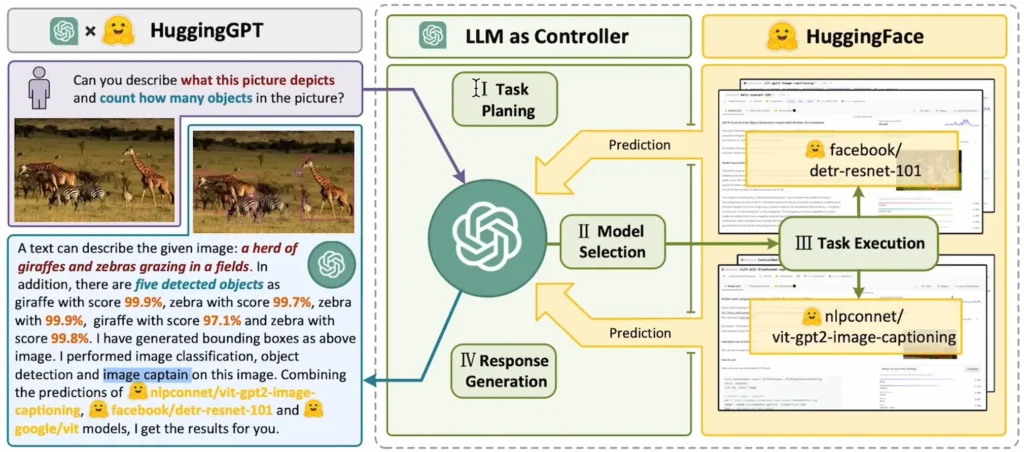

Let’s look at this graphic here because it really speaks to what is possible with HuggingGPT. In this image, the system is given a prompt: “Can you describe what this picture depicts and count how many objects are in the picture?” It needs to run several different analyses on the picture to answer that prompt.

Here, it says that a text can describe the given image: “A herd of giraffes and zebras grazing in fields.” So first, it has to understand the image and understand what the contents of the image are. Next, it says there are five detected objects: giraffe, zebra, zebra, giraffe, and zebra. Additionally, it has generated bounding boxes in the above image.
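
To make that last step concrete, here’s a minimal sketch of how a caption and a list of detections could be folded into one answer. In HuggingGPT itself the LLM writes the reply; the fixed template and the `compose_response` helper below are assumptions of mine, and the inputs are just the values from the figure.

```python
from collections import Counter
from typing import List

# Values taken from the example in the figure.
caption = "a herd of giraffes and zebras grazing in fields"
detections = ["giraffe", "zebra", "zebra", "giraffe", "zebra"]


def compose_response(caption: str, labels: List[str]) -> str:
    """Merge a caption and detected labels into a single reply (template stand-in for the LLM)."""
    counts = Counter(labels)
    # Sort by label so the wording is stable regardless of detection order.
    parts = ", ".join(
        f"{n} {label}{'s' if n != 1 else ''}" for label, n in sorted(counts.items())
    )
    return f"The picture shows {caption}. I detected {len(labels)} objects: {parts}."


print(compose_response(caption, detections))
# prints: The picture shows a herd of giraffes and zebras grazing in fields. I detected 5 objects: 2 giraffes, 3 zebras.
```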

With this, it’s done image classification, object detection, and image captioning, combining three different models into one response. HuggingGPT performs four steps when it receives a request: task planning, model selection, task execution, and response generation.

- Task Planning: It understands the prompt and starts to plan out what tools are needed to execute the prompt.

- Model Selection: It looks through all the different models it has access to and chooses the ones it thinks will be needed for the job.

- Task Execution: It uses all of those different models, grabs the data it needs, and ingests it back.

- Response Generation: It summarizes or boils down all that data into a response to give back to the user.
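
Here’s a rough sketch of what a task plan could look like and how the execution order falls out of it. The structure is loosely modeled on the JSON-style task list described in the paper, but treat the field names, the `-1` marker for “no dependency”, and the task names as assumptions made for illustration. The ordering uses `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical plan for "describe this picture and count the objects".
# Field names and the -1 "no dependency" marker are assumptions of this sketch.
PLAN = [
    {"task": "image-captioning", "id": 0, "dep": [-1], "args": {"image": "photo.jpg"}},
    {"task": "object-detection", "id": 1, "dep": [-1], "args": {"image": "photo.jpg"}},
    {"task": "answer-composition", "id": 2, "dep": [0, 1], "args": {}},
]


def execution_order(plan):
    """Return task ids in an order that runs every dependency before its dependents."""
    # TopologicalSorter expects {node: set_of_predecessors}.
    graph = {
        step["id"]: {dep for dep in step["dep"] if dep != -1}
        for step in plan
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(PLAN)
names = {step["id"]: step["task"] for step in PLAN}
print([names[task_id] for task_id in order])
```

The two image tasks don’t depend on each other, so they could run in parallel; the composition step has to wait for both.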

This is really awesome stuff! The paper also talks about generalized models being really good at a lot of things, but specialized models being better at specialized tasks. For some challenging tasks, LLMs demonstrate excellent results in zero-shot or few-shot settings, but they are still weaker than some experts. Experts, in this case, are fine-tuned models.

The paper points out that in order to handle complicated AI tasks, LLMs should be able to coordinate with external models to utilize their powers. The generalized model should be the orchestrator, and the specific fine-tuned models should do what they’re best at, which are specific tasks for very specific queries.

Integrations

Another highlight is that HuggingGPT has already integrated hundreds of models on Hugging Face, which is really cool, and it’s all built around ChatGPT. ChatGPT from OpenAI is still that central brain, the dispatch, or the orchestrator, if you will.

They cover 24 tasks such as text classification, object detection, semantic segmentation, image generation, question answering, text-to-speech, and text-to-video.

For instance, let’s say I need to put together a presentation about seagulls, and I want it to be a multimedia presentation. First, it needs to actually do the research on seagulls. Then, it needs to put together a PDF, potentially with images.

This probably wouldn’t be possible from a single generalized model, whereas a single general model orchestrating a bunch of fine-tuned models for each of these individual subtasks would do it really well.

HuggingGPT uses a large language model as an interface to route user requests to expert models. Again, it’s like that orchestration layer or a router. HuggingGPT is not limited to visual perception tasks but can address tasks in any modality or domain.

Conclusion

Lastly, I want to talk about how they do this. HuggingGPT adopts a more open approach by assigning and organizing tasks based on model descriptions. Literally, the descriptions that people write for their models on Hugging Face are what ChatGPT, the brain of the system, uses to decide which model to use for which task.
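
Here’s a minimal sketch of description-based selection, just to show the idea. Everything in it is hypothetical: the model ids, the descriptions, and the download counts are made up, and ranking candidates by downloads before showing them to the LLM is a design choice of this sketch rather than something confirmed here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModelCard:
    model_id: str      # hypothetical identifier, not a real model
    task: str          # task type the model declares
    description: str   # free-text description written by the model's author
    downloads: int     # made-up popularity figure


CATALOG = [
    ModelCard("example/detector-small", "object-detection", "Fast detector for common objects.", 1200),
    ModelCard("example/detector-large", "object-detection", "Accurate detector for crowded scenes.", 5400),
    ModelCard("example/captioner", "image-to-text", "Generates one-sentence image captions.", 3100),
]


def shortlist(task: str, catalog: List[ModelCard], top_k: int = 2) -> List[ModelCard]:
    """Keep models that declare the task, most-downloaded first."""
    candidates = [card for card in catalog if card.task == task]
    return sorted(candidates, key=lambda card: card.downloads, reverse=True)[:top_k]


def build_selection_prompt(user_request: str, candidates: List[ModelCard]) -> str:
    """Put the candidates' descriptions into a prompt for the controlling LLM."""
    lines = [f"- {card.model_id}: {card.description}" for card in candidates]
    return (
        f"User request: {user_request}\n"
        "Candidate models:\n" + "\n".join(lines) + "\n"
        "Pick the best model id for this request and explain why."
    )


picked = shortlist("object-detection", CATALOG)
print(build_selection_prompt("Count the objects in this picture.", picked))
```

Because the LLM only ever sees text descriptions, adding a new expert is just a matter of adding another entry with a good description; nothing in the controller has to be retrained.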

It’s really fantastic how they thought about architecting this in an open and continuous manner, which they say brings us one step closer to realizing Artificial General Intelligence, whether you think we need a pause or not.

This does bring us one step closer because this architecture allows models to do what they do best, whether you’re a fine-tuned model or a generalized model. The paper provides many different examples of how it works and how it can be used.